Introduction

In the ever-evolving landscape of computer vision, YOLOv8 (You Only Look Once version 8) has emerged as a powerful tool for object detection and segmentation. As businesses and researchers delve into the realm of artificial intelligence, understanding the YOLOv8 annotation format becomes crucial.

In this comprehensive guide, we’ll explore the intricacies of the YOLOv8 Annotation Format, providing a clear roadmap for efficient object detection and segmentation.

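Before delving into the annotation format, let’s grasp the fundamentals of YOLOv8. YOLO, short for You Only Look Once, is an object detection approach that predicts bounding boxes and class probabilities for an entire image in a single forward pass of one neural network, instead of first proposing candidate regions and then classifying each one. This single-pass design is what makes YOLO models fast enough for real-time detection.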

The eighth version, YOLOv8, released by Ultralytics in 2023, builds upon its predecessors with improved accuracy and speed. This makes it a strong choice for applications ranging from autonomous vehicles to surveillance systems.

The Significance of Annotation

Annotation is the backbone of any object detection model, including YOLOv8. It involves labeling objects within an image, providing the algorithm with ground truth data for training.

Proper annotation helps the model accurately identify and classify objects in new, unseen images. Now, let’s dive into the specifics of the YOLOv8 annotation format.

YOLOv8 Annotation Format

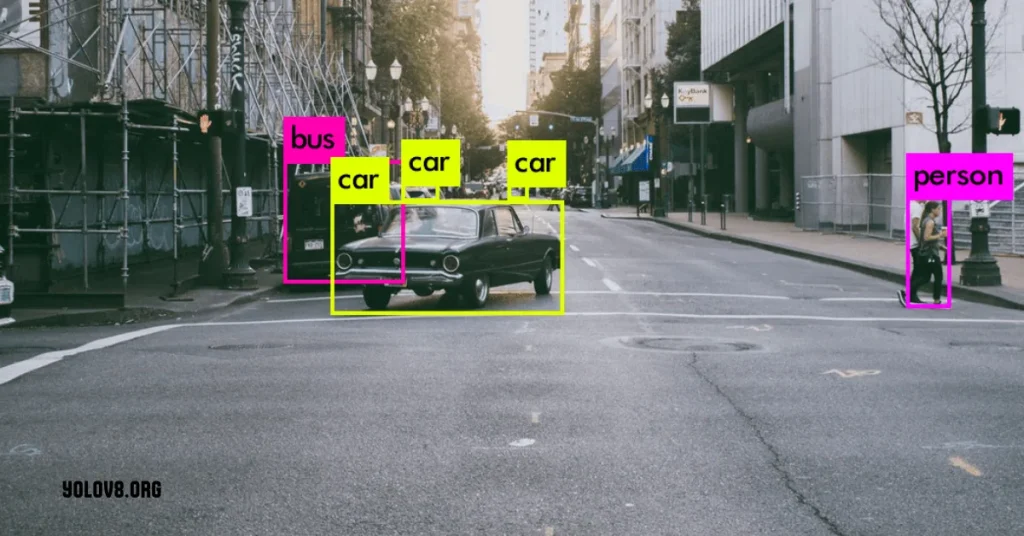

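- Bounding Boxes: YOLOv8 relies on bounding boxes to delineate the boundaries of objects in an image. Each bounding box consists of four parameters: the x and y coordinates of the box’s center, its width, and its height. All four values are normalized to the 0-1 range by dividing by the image width (for x and width) or the image height (for y and height).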

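- Class Labels: Assigning appropriate class labels to objects is crucial for the model’s understanding. Each object is associated with a class such as ‘car,’ ‘person,’ or ‘dog,’ which the label file stores as a zero-based integer index at the start of the object’s line; the mapping from indices to names is defined separately in the dataset configuration.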

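- Confidence Scores: Confidence scores are not part of the annotation format. They are produced by the model at inference time and indicate how certain it is that a detected object is present; a higher confidence score implies greater certainty. Training labels contain only class indices and box coordinates.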

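- Multiple Objects: YOLOv8 excels in detecting multiple objects within a single image. The annotation format accommodates this with one text file per image and one line per object, so an image can carry any number of bounding boxes and class labels. Images that contain no objects need no label lines at all.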
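To make these components concrete, here is a minimal sketch that turns pixel-space corner coordinates (the way many annotation tools display boxes) into YOLO label lines. It assumes the standard detection layout of one object per line, `class x_center y_center width height`, with the four box values normalized by the image size. The function names are illustrative, not part of any library.

```python
def box_to_yolo_line(class_id, x_min, y_min, x_max, y_max, img_w, img_h):
    """Convert one pixel-space box in corner format into a YOLO label line.

    The four box values are normalized to the 0-1 range by the image size.
    """
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


def objects_to_label_file(objects, img_w, img_h):
    """Build the contents of one label file: one line per (class_id, box) object."""
    lines = [box_to_yolo_line(cls, *box, img_w, img_h) for cls, box in objects]
    return "".join(line + "\n" for line in lines)  # empty string when there are no objects


if __name__ == "__main__":
    # A 640x480 image with a person (class 0) and a car (class 2).
    print(objects_to_label_file([(0, (100, 120, 300, 400)), (2, (400, 200, 640, 480))], 640, 480))
    # 0 0.312500 0.541667 0.312500 0.583333
    # 2 0.812500 0.708333 0.375000 0.583333
```

Six decimal places is a common choice for these files, but it is a formatting assumption rather than a requirement of the format.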

The Annotation Process

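Now that we’ve outlined the key components, let’s walk through the annotation process itself. Tools like LabelImg or RectLabel facilitate this process by allowing users to draw bounding boxes around objects, assign class labels, and export the results as YOLO-format text files. By Ultralytics convention, each label file shares its image’s file name (with a .txt extension) and sits in a labels directory that mirrors the images directory. It’s imperative to annotate a diverse dataset to ensure the model’s robustness across various scenarios.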

Best Practices for YOLOv8 Annotation

As a quick recap, YOLO, or “You Only Look Once,” is an object detection algorithm that divides an image into a grid and predicts bounding boxes and class probabilities for each grid cell. YOLOv8 follows this idea with an anchor-free detection head that makes predictions on grids at several scales.

Annotation in YOLOv8 involves marking objects in an image with bounding boxes and assigning corresponding class labels.

1: Consistent Labeling Standards

Consistency is key in YOLOv8 annotation. Establish clear and concise labeling standards for your dataset, covering, for example, which class names to use and how tightly boxes should fit. Ensure that all annotators are well-versed in these standards to maintain uniformity across annotations. Consistent labeling facilitates model training and enhances the overall accuracy of object detection.

2: Balancing Quality and Quantity

While it’s essential to have an ample quantity of annotated images, quality should never be compromised. Emphasize the precision of object boundaries and accurate class assignments. Striking the right balance between quality and quantity ensures that the model generalizes well to unseen data.

3: Utilizing Bounding Box Adjustments

Annotation tools let you adjust bounding boxes after drawing them. Take advantage of this by fine-tuning each box so it fits the target object tightly, with no large margin of background and no parts of the object cut off. This meticulous approach enhances the model’s ability to localize objects in varied scenarios.
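Some of this fine-tuning can be checked automatically. The sketch below clips a normalized YOLO box that spills past the image border back inside the frame, so all coordinates stay in the 0-1 range. The helper `clip_yolo_box` is hypothetical, not an Ultralytics function, and it cannot tighten a box that is simply too loose around its object; that still needs a human eye.

```python
def clip_yolo_box(x_center, y_center, width, height):
    """Clip a normalized (x_center, y_center, width, height) box to the image.

    Converts to corners, clamps each corner to [0, 1], then converts back.
    Returns None if no part of the box lies inside the image.
    """
    x_min = max(0.0, x_center - width / 2)
    y_min = max(0.0, y_center - height / 2)
    x_max = min(1.0, x_center + width / 2)
    y_max = min(1.0, y_center + height / 2)
    if x_max <= x_min or y_max <= y_min:
        return None  # the box lies entirely outside the image
    return ((x_min + x_max) / 2, (y_min + y_max) / 2, x_max - x_min, y_max - y_min)


if __name__ == "__main__":
    # A box whose right edge extends past the image border.
    print(clip_yolo_box(0.9, 0.5, 0.4, 0.2))
```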

4: Addressing Occlusion Challenges

Real-world images often involve occluded objects, presenting a challenge for accurate annotation. Clearly define guidelines for annotators to handle occlusion scenarios, for example whether to box only the visible part of a partially hidden object or its estimated full extent, and apply that rule consistently. Standard YOLO detection labels have no field for visibility, so label partially visible objects rather than skipping them; this helps the model learn to identify objects even in challenging conditions.

5: Applying Randomization Techniques

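Introduce randomization to mimic diverse real-world scenarios, but apply it to the data rather than to the labels themselves: boxes should always match where objects really are. When collecting images, aim for variation in object positions, object sizes, and background clutter; during training, data augmentation (horizontal flips, random scaling, mosaic, and color shifts, which Ultralytics YOLOv8 applies by default) transforms each image together with its boxes. Randomization enhances the model’s robustness and prepares it for a wide range of applications.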
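One common way to add randomization is data augmentation at training time, where each image is transformed and its boxes must move with it. As a minimal sketch of the idea, here is a random horizontal flip applied to YOLO detection labels: mirroring the image maps each x_center to 1 - x_center and leaves the other values unchanged. The function names are illustrative; Ultralytics applies its own augmentations during training, so you would not normally write this yourself.

```python
import random


def hflip_label_line(line):
    """Mirror one YOLO detection label line horizontally.

    Only x_center changes (x becomes 1 - x); y_center, width and height stay the same.
    """
    class_id, x_center, y_center, width, height = line.split()
    return f"{class_id} {1 - float(x_center):.6f} {y_center} {width} {height}"


def random_hflip(label_lines, p=0.5, rng=random):
    """With probability p, flip every label of one image.

    Returns (labels, flipped); when flipped is True the image pixels must be mirrored too.
    """
    if rng.random() < p:
        return [hflip_label_line(line) for line in label_lines], True
    return list(label_lines), False


if __name__ == "__main__":
    rng = random.Random(42)  # seeded for reproducibility
    print(random_hflip(["0 0.250000 0.500000 0.100000 0.200000"], rng=rng))
```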

6: Regularizing Class Imbalances

In datasets, certain classes may be more prevalent than others, leading to class imbalances. Reduce these imbalances by collecting and annotating more examples of underrepresented classes so that every class is reasonably represented. This prevents the model from being biased toward dominant classes and leads to more even detection performance across classes.
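Before rebalancing, it helps to measure the imbalance. A minimal sketch, assuming the contents of your label files are already loaded as strings: it counts object instances per class index (the first value on each line). Classes with no instances at all are simply missing from the result, so compare it against your full class list. The function names are illustrative.

```python
from collections import Counter


def class_counts(label_files):
    """Count object instances per class id across the contents of many label files."""
    counts = Counter()
    for content in label_files:
        for line in content.splitlines():
            if line.strip():  # skip blank lines
                counts[int(line.split()[0])] += 1
    return counts


def imbalance_ratio(counts):
    """Ratio of the most to the least frequent class (1.0 means perfectly balanced)."""
    return max(counts.values()) / min(counts.values())


if __name__ == "__main__":
    files = ["0 0.5 0.5 0.1 0.1\n0 0.2 0.2 0.1 0.1\n", "1 0.5 0.5 0.2 0.2\n"]
    counts = class_counts(files)
    print(dict(counts), imbalance_ratio(counts))
```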

7: Implementing Cross-Validation Strategies

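Hold out part of your annotated data to gauge your YOLOv8 model’s generalization capabilities. Split your dataset into training and validation sets (an Ultralytics dataset YAML file points to each through its train and val keys), so you can assess the model’s performance on unseen data and make the necessary adjustments for improved accuracy. For a more robust estimate, k-fold cross-validation repeats this with k different validation folds. Split at the image level so each image and its label file always land in the same set.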
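A minimal sketch of a reproducible split, assuming images are identified by their file stems (the label file shares the stem), plus a k-fold variant for true cross-validation. The function names, the 20% validation fraction, and the seed are illustrative assumptions.

```python
import random


def train_val_split(image_stems, val_fraction=0.2, seed=0):
    """Reproducibly hold out a fraction of images for validation.

    Splitting by image stem keeps each image and its same-named label file together.
    """
    stems = sorted(image_stems)  # sort first so the result does not depend on input order
    random.Random(seed).shuffle(stems)
    n_val = max(1, round(len(stems) * val_fraction))
    return stems[n_val:], stems[:n_val]


def k_fold(image_stems, k=5, seed=0):
    """Yield (train, val) stem lists for k-fold cross-validation.

    Every image appears in exactly one validation fold.
    """
    stems = sorted(image_stems)
    random.Random(seed).shuffle(stems)
    folds = [stems[i::k] for i in range(k)]
    for i, val in enumerate(folds):
        train = [stem for j, fold in enumerate(folds) if j != i for stem in fold]
        yield train, val


if __name__ == "__main__":
    stems = [f"img_{i:03d}" for i in range(10)]
    train, val = train_val_split(stems)
    print(len(train), len(val))  # 8 2
```

Each resulting list can be written out as a text file of image paths; Ultralytics dataset YAML files accept either directories or such text files for train and val.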

8: Optimizing for Speed and Efficiency

YOLOv8 is renowned for its real-time inference speed, but the annotation pipeline that feeds it needs to be efficient too. Using efficient tools and workflows, optimize the annotation process itself for speed. Streamlining annotation ensures timely model deployment without compromising accuracy.

9: Version Control for Annotations

Maintain version control for annotations to track changes and improvements. This ensures a systematic approach to dataset evolution, allowing you to revert to previous versions if needed. Version control also aids collaboration among annotators, fostering a cohesive annotation strategy.
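Git or a dataset versioning tool handles the history itself, but an object-level diff makes label reviews easier. This hypothetical helper compares two versions of one label file line by line; since each line is one object, a moved or resized box shows up as one removal plus one addition. It assumes both versions use the same number formatting (0.5 and 0.500000 compare as different strings) and ignores duplicate lines.

```python
def diff_label_versions(old_content, new_content):
    """Report objects (label lines) added and removed between two versions of one label file."""
    old = {line.strip() for line in old_content.splitlines() if line.strip()}
    new = {line.strip() for line in new_content.splitlines() if line.strip()}
    return {"added": sorted(new - old), "removed": sorted(old - new)}


if __name__ == "__main__":
    v1 = "0 0.5 0.5 0.1 0.1\n1 0.2 0.2 0.1 0.1\n"
    v2 = "0 0.5 0.5 0.1 0.1\n1 0.25 0.2 0.1 0.1\n"  # the class-1 box was nudged right
    print(diff_label_versions(v1, v2))
```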

10: Continuous Iteration and Improvement

The field of computer vision is dynamic, and your annotation strategy should reflect that. Foster a culture of continuous iteration and improvement. Stay abreast of the latest advancements, update your dataset accordingly, and retrain your YOLOv8 model to leverage the latest enhancements.

Effective annotation in the YOLOv8 format is pivotal for unleashing the full potential of this powerful object detection framework.

By adhering to consistent labeling standards, balancing quality and quantity, addressing specific challenges, and embracing continuous improvement, you give your YOLOv8 model the best chance of achieving high accuracy and efficiency.

Implement these best practices, and propel your computer vision projects to new heights.

Overcoming Challenges in Annotation

Despite its effectiveness, annotation can pose challenges. Common issues include occlusions, overlapping objects, and ambiguous boundaries. Utilizing advanced annotation techniques and leveraging human expertise can help overcome these challenges.

As a reminder, annotation means labeling the objects in each image so the model can learn to detect them. Because YOLOv8 learns directly from these labels, precise annotation is a critical part of the implementation process.

Challenge 1: Ensuring Consistency in Annotation Styles

One common hurdle in YOLOv8 annotation is maintaining consistency across annotations. Variations in labeling style, such as some annotators drawing loose boxes and others tight ones, can lead to inaccuracies in the model’s learning process. To overcome this challenge, establish clear annotation guidelines and provide annotators with comprehensive training to ensure a uniform approach.

Challenge 2: Dealing with Ambiguous Objects

Ambiguity in images, where objects overlap or have unclear boundaries, poses a significant challenge. Address this by incorporating a detailed annotation protocol that includes guidelines for annotating ambiguous objects. Additionally, use tools that allow annotators to highlight areas of uncertainty for further review.

Challenge 3: Scaling for Large Datasets

Scalability becomes a challenge for projects dealing with extensive datasets. Efficiently annotating a large number of images requires robust tools and methodologies. Invest in scalable annotation platforms that enable collaboration among annotators, ensuring a streamlined process for handling large datasets.

Challenge 4: Quality Control and Annotation Accuracy

Maintaining annotation accuracy is paramount for YOLOv8’s success. Implement a rigorous quality control process, including regular reviews of annotated data. Utilize feedback loops and ensure open communication channels between annotators and supervisors to address any discrepancies promptly.
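A common quantitative check during review is the intersection over union (IoU) between two annotators’ boxes for the same object: low agreement flags the item for a supervisor. The sketch below works directly on normalized YOLO boxes, which is safe because normalization scales every area by the same factor and leaves IoU unchanged. The 0.7 threshold is an assumption to tune for your project, and matching boxes between annotators is assumed to have happened already.

```python
def yolo_to_corners(x_center, y_center, width, height):
    """Convert a normalized center-format box to (x_min, y_min, x_max, y_max)."""
    return (x_center - width / 2, y_center - height / 2,
            x_center + width / 2, y_center + height / 2)


def iou(box_a, box_b):
    """Intersection over union of two normalized YOLO boxes (x_center, y_center, width, height)."""
    ax1, ay1, ax2, ay2 = yolo_to_corners(*box_a)
    bx1, by1, bx2, by2 = yolo_to_corners(*box_b)
    # Overlap along each axis; zero when the boxes do not intersect.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    union = box_a[2] * box_a[3] + box_b[2] * box_b[3] - intersection
    return intersection / union if union > 0 else 0.0


def needs_review(box_a, box_b, threshold=0.7):
    """Flag a pair of annotations whose agreement falls below the (assumed) threshold."""
    return iou(box_a, box_b) < threshold


if __name__ == "__main__":
    annotator_1 = (0.5, 0.5, 0.2, 0.2)
    annotator_2 = (0.6, 0.5, 0.2, 0.2)  # shifted right by half a box width
    print(iou(annotator_1, annotator_2), needs_review(annotator_1, annotator_2))
```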

Challenge 5: Time-Consuming Annotation Processes

Annotation can be time-consuming and affect project timelines. To optimize the annotation process, leverage automation tools and scripts to handle repetitive tasks. This not only accelerates the annotation workflow but also reduces the likelihood of errors.
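Format validation is a good first candidate for automation. This sketch checks each line of a detection label file for the five expected values, an integer class index within range, and box values normalized to 0-1. The function names and the report shape are illustrative assumptions; Ultralytics runs its own checks when it loads a dataset, but catching problems earlier keeps the feedback loop with annotators short.

```python
def validate_label_line(line, num_classes):
    """Return a list of problems found in one YOLO detection label line (empty if valid)."""
    parts = line.split()
    if len(parts) != 5:
        return [f"expected 5 values, got {len(parts)}"]
    try:
        class_id = int(parts[0])
    except ValueError:
        return [f"class id {parts[0]!r} is not an integer"]
    problems = []
    if not 0 <= class_id < num_classes:
        problems.append(f"class id {class_id} outside 0..{num_classes - 1}")
    try:
        x, y, w, h = (float(v) for v in parts[1:])
    except ValueError:
        return problems + ["box values must be numbers"]
    for name, value in (("x_center", x), ("y_center", y), ("width", w), ("height", h)):
        if not 0.0 <= value <= 1.0:
            problems.append(f"{name}={value} not normalized to 0-1")
    if w == 0 or h == 0:
        problems.append("zero-size box")
    return problems


def validate_label_file(content, num_classes):
    """Map 1-based line numbers to their problems, for every invalid line in a label file."""
    report = {}
    for number, line in enumerate(content.splitlines(), start=1):
        if line.strip():
            issues = validate_label_line(line, num_classes)
            if issues:
                report[number] = issues
    return report


if __name__ == "__main__":
    # Line 2 uses pixel values instead of normalized ones.
    print(validate_label_file("0 0.5 0.5 0.2 0.2\n1 320 240 50 80\n", num_classes=3))
```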

Challenge 6: Choosing the Right Annotation Tools

Selecting suitable annotation tools is critical for efficiency. Evaluate tools based on their compatibility with YOLOv8, user-friendliness, and support for specific annotation types. Experiment with different tools to identify the ones that align best with your project requirements.

Challenge 7: Staying Updated with YOLOv8 Releases

Like any software, YOLOv8 and the Ultralytics package that provides it receive updates and improvements. Staying current with the latest releases is essential to taking advantage of enhanced features and optimizations. Regularly check for updates, confirm against the documentation that your label files still match the expected format, and integrate the updates into your workflow.

Challenge 8: Managing Annotator Workloads

Balancing workloads among annotators is crucial for maintaining quality and efficiency. Implement a workload management system that distributes tasks evenly, considering annotator expertise and project requirements.

Challenge 9: Handling Class Imbalances

Class imbalances, where particular classes are underrepresented in the dataset, can impact model accuracy. Mitigate this challenge by prioritizing balanced sampling during annotation and employing techniques like data augmentation to address class imbalances.
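One concrete form of balanced sampling is repeat-factor sampling, a technique introduced with the LVIS dataset rather than a built-in YOLOv8 feature. Each class c gets a factor r(c) = max(1, sqrt(t / f(c))), where f(c) is the fraction of images containing c and t is a threshold, and each image is repeated according to the largest factor among its classes. The sketch below computes those per-image factors; the function name and the threshold value are assumptions to tune.

```python
import math
from collections import Counter


def repeat_factors(image_classes, threshold=0.1):
    """Per-image repeat factors for repeat-factor sampling.

    image_classes: one set of class ids per image (the classes present in that image).
    threshold: class image-frequency below which oversampling starts (assumed value).
    """
    num_images = len(image_classes)
    # f(c): fraction of images that contain class c at least once.
    images_with_class = Counter(c for classes in image_classes for c in classes)
    class_factor = {
        c: max(1.0, math.sqrt(threshold / (count / num_images)))
        for c, count in images_with_class.items()
    }
    # An image is repeated according to its rarest (highest-factor) class.
    return [max((class_factor[c] for c in classes), default=1.0) for classes in image_classes]


if __name__ == "__main__":
    # Class 1 appears in only 1 of 10 images, so that image gets a factor of about 2.
    print(repeat_factors([{0}] * 9 + [{0, 1}], threshold=0.4))
```

An epoch would then include each image roughly r times, for example by repeating it floor(r) times plus once more with probability r - floor(r).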

Challenge 10: Future-Proofing Annotations

As technology advances, future-proofing annotations becomes vital. Document your annotations comprehensively (class list, labeling guidelines, and label format), following the YOLOv8 documentation, so they are easier to adapt to evolving model architectures or new annotation standards.

Overcoming challenges in YOLOv8 annotation requires a strategic approach. By addressing issues related to consistency, ambiguity, scalability, and quality control, among others, you can enhance the effectiveness of your YOLOv8 implementation.

Stay proactive in adopting the latest tools and techniques, and always prioritize the quality of your annotations for optimal object detection results.

Conclusion

In conclusion, mastering the YOLOv8 annotation format is a pivotal step toward harnessing the full potential of this cutting-edge object detection algorithm.

By understanding the nuances of the format, and of related topics such as minimum bounding box algorithms, you can produce annotations that a model learns from reliably.

As you dive into object detection and segmentation, remember that a well-annotated dataset is crucial for success with YOLOv8, setting the foundation for advancements in computer vision.

FAQs (Frequently Asked Questions)

Q#1: What is the annotation format used in YOLOv8?

YOLOv8 uses the widely adopted YOLO text format: one .txt file per image, in which each line describes one object instance. For object detection, a line holds the class index followed by the bounding box center x, center y, width, and height, all normalized to the 0-1 range. Other tasks extend this layout; segmentation labels, for example, list the class index followed by the normalized x y coordinates of a polygon’s vertices.
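Detection and segmentation labels share the same one-object-per-line layout but differ in what follows the class index: four normalized box values for detection, or normalized x y pairs of polygon vertices for segmentation (per the Ultralytics dataset documentation). A small parser sketch that tells them apart; the function name and the dictionary it returns are illustrative assumptions, and pose labels (which add keypoints after the box) are deliberately not handled.

```python
def parse_label_line(line):
    """Parse one YOLO label line into a dict.

    4 values after the class -> detection box (x_center, y_center, width, height).
    An even number (>= 6) of values -> segmentation polygon [(x1, y1), (x2, y2), ...].
    All coordinates are normalized to 0-1.
    """
    parts = line.split()
    class_id, values = int(parts[0]), [float(v) for v in parts[1:]]
    if len(values) == 4:
        return {"class_id": class_id, "box": tuple(values)}
    if len(values) >= 6 and len(values) % 2 == 0:
        points = list(zip(values[0::2], values[1::2]))  # pair up x and y coordinates
        return {"class_id": class_id, "polygon": points}
    raise ValueError(f"unexpected number of values: {len(values)}")


if __name__ == "__main__":
    print(parse_label_line("0 0.5 0.5 0.2 0.2"))
    print(parse_label_line("1 0.1 0.1 0.4 0.1 0.25 0.3"))  # a triangle
```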

Q#2: How are class labels defined in YOLOv8 Annotation Format files?

Class labels in YOLOv8 annotation files are represented by integers starting from 0. In Ultralytics YOLOv8, the mapping of these integers to class names is defined in the dataset’s YAML file under the “names” key. Some annotation tools, such as LabelImg, also write a “classes.txt” file, where each line holds a class name and its zero-based line index is the associated integer label.
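A minimal sketch of resolving class indices to names from a “classes.txt” file, where the line index is the class id. The Ultralytics dataset YAML expresses the same mapping under its names key (for example, 0: person). The function names are hypothetical.

```python
def load_class_names(classes_txt):
    """Map class index -> name; the index is the zero-based line number in classes.txt."""
    lines = classes_txt.rstrip().splitlines()  # drop trailing blank lines only, so indices stay aligned
    return {index: name.strip() for index, name in enumerate(lines)}


def class_name_for(label_line, names):
    """Look up the human-readable class name for one YOLO label line."""
    return names[int(label_line.split()[0])]


if __name__ == "__main__":
    names = load_class_names("person\ncar\ndog\n")
    print(names)
    print(class_name_for("1 0.5 0.5 0.2 0.2", names))  # car
```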

Q#3: Can YOLOv8 Annotation Format files support multiple objects in a single image?

Yes, YOLOv8 Annotation Format files support multiple objects in a single image. Each object is represented by a separate line in the annotation file, providing details such as class labels, bounding box coordinates, and any additional attributes. This allows for the annotation of diverse and complex scenes with multiple objects.

Q#4: Are there any specific tools recommended for annotating datasets in YOLOv8 format?

While annotation tools are not strictly required, popular tools such as LabelImg and RectLabel can export YOLO-format annotation files directly. Others, such as VGG Image Annotator (VIA), export formats like COCO JSON or CSV that need a conversion step. All of these provide user-friendly interfaces for labeling objects in images.

Q#5: Is there a specific order or structure to follow when creating YOLOv8 Annotation Format files?

Yes, YOLOv8 annotation files have a specific structure. For detection, each line represents one object instance and follows the format: <class_label> <x_center> <y_center> <width> <height>, where the class label is an integer index and the four box values are normalized to the 0-1 range by the image width and height. It is crucial to maintain consistency and adhere to the YOLOv8 format specifications to ensure proper training and inference with the YOLOv8 model.
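Going the other way, from normalized values back to pixels, is handy for drawing labels on an image to spot-check them. A minimal sketch, assuming a detection line in the format above and a known image size; the function name is illustrative.

```python
def yolo_line_to_pixels(line, img_w, img_h):
    """Convert a normalized YOLO detection line to (class_id, x_min, y_min, x_max, y_max) in pixels."""
    class_id, x, y, w, h = line.split()
    # Scale x and width by the image width, y and height by the image height.
    x, w = float(x) * img_w, float(w) * img_w
    y, h = float(y) * img_h, float(h) * img_h
    return int(class_id), x - w / 2, y - h / 2, x + w / 2, y + h / 2


if __name__ == "__main__":
    print(yolo_line_to_pixels("0 0.3125 0.5 0.3125 0.5", 640, 480))  # (0, 100.0, 120.0, 300.0, 360.0)
```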


I’m Jane Austen, a skilled content writer with the ability to simplify any complex topic. I focus on delivering valuable tips and strategies throughout my articles.